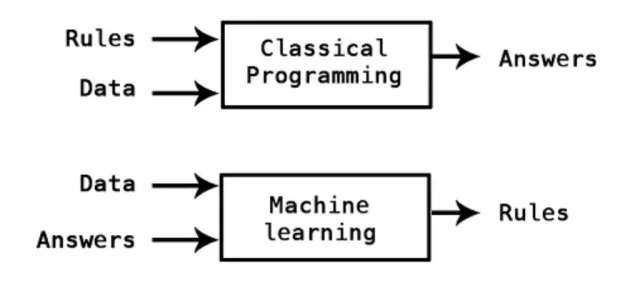

Traditional document verification and validation systems rely heavily on conventional programming. The limitation of conventional programming is that it executes a predefined procedure (rules) on a standard data set (input data). Real-world documents, however, vary in ways that fixed rules cannot anticipate, so we need to combine classical programming with artificial intelligence and machine learning to detect fraudulent documents.

Organizations that handle financial and medical records, government agencies, customs and police authorities, private businesses, banks, insurance companies, and many others have enormous and rapidly changing document verification and validation requirements.

The architecture described in this article addresses these requirements. It offers private and public organizations a time-saving and cost-effective document verification system by combining conventional programming with machine learning on the AWS platform.

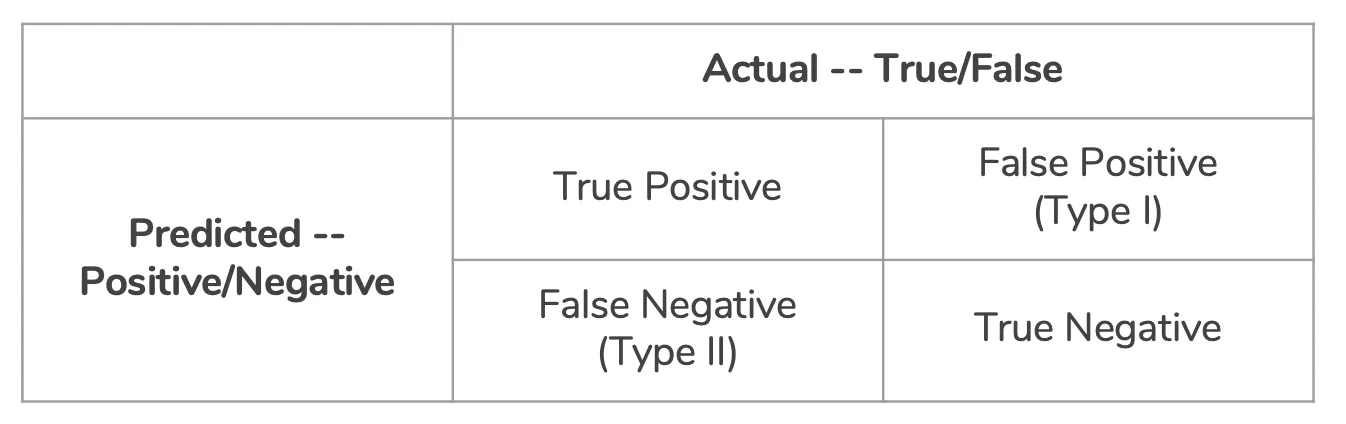

The machine learning algorithm must identify documents accurately enough to meet business needs. A confusion matrix is used to measure the precision and accuracy of the machine learning algorithm.

Ideally, the document verification and validation system should classify each document as fake or authentic (the predicted value) and match its true status (the actual value) with close-to-human accuracy.

As the confusion matrix shows, there can be Type 1 errors (false positives) and Type 2 errors (false negatives), and the system has to compensate for them. The critical distinction of the architecture proposed in this article is that it compensates for Type 1 and Type 2 errors using SageMaker.
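To make the confusion matrix concrete, here is a minimal sketch in plain Python. It assumes "fake" is the positive class, so a Type 1 error is an authentic document flagged as fake and a Type 2 error is a fake document passed as authentic. The sample labels and the function names `confusion_counts` and `summarize` are illustrative, not part of the proposed system.

```python
from collections import Counter


def confusion_counts(actual, predicted, positive="fake"):
    """Count true/false positives and negatives, treating `positive` as the target class."""
    counts = Counter()
    for a, p in zip(actual, predicted):
        if p == positive:
            counts["tp" if a == positive else "fp"] += 1
        else:
            counts["fn" if a == positive else "tn"] += 1
    return counts


def summarize(counts):
    """Derive accuracy, precision, and Type 1 / Type 2 error rates from the counts."""
    tp, fp, fn, tn = (counts[k] for k in ("tp", "fp", "fn", "tn"))
    total = tp + fp + fn + tn
    return {
        "accuracy": (tp + tn) / total,
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        # Type 1: authentic documents wrongly flagged as fake.
        "type_1_rate": fp / (fp + tn) if fp + tn else 0.0,
        # Type 2: fake documents wrongly accepted as authentic.
        "type_2_rate": fn / (fn + tp) if fn + tp else 0.0,
    }


# Made-up example labels, for illustration only.
actual = ["fake", "fake", "authentic", "authentic", "authentic", "fake"]
predicted = ["fake", "authentic", "authentic", "fake", "authentic", "fake"]

counts = confusion_counts(actual, predicted)
metrics = summarize(counts)
print(dict(counts), metrics)
```

Which error type matters more depends on the organization: a customs desk may tolerate more Type 1 errors (extra manual checks) in exchange for fewer Type 2 errors.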

This article highlights the importance of AWS services (Textract, Rekognition, and SageMaker) in building an automatic document verification and validation system. We have explained the three-layer analysis (Textual, graphical and document feature analysis) and their importance considering business requirements.

We are proposing a document verification and validation system (architecture) to satisfy the needs of a variety of private and public organizations. This type of system demands flexible, secure, easily accessible, and cost-effective data storage, along with a machine learning platform to build, train, and deploy models. It also requires APIs that can effectively perform Optical Character Recognition. AWS offers all of these.

Considering business and technical requirements of this new document verification system, AWS offers features like scale, cost effectiveness, security, accuracy, and speed. Let’s briefly discuss each of them.

We are using Amazon SageMaker to build ML models. Many of SageMaker's built-in algorithms stream their training data, so they scale to very large data sets without a matching growth in memory. From the algorithm's point of view, processing 20 GB of data is almost the same as processing 2,000 GB, although training time still depends on data volume. This can empower multinational organizations to process hundreds of thousands of documents within hours.

Whether it is Amazon S3, SageMaker, Textract, or Rekognition, Amazon offers a pay-as-you-go pricing model. There are no minimum fees and no upfront commitments, which keeps the system within reach of multinational organizations, small businesses, and government agencies alike.

SageMaker pricing is broken down by on-demand ML instances, ML storage, and fees for data processing in notebooks and hosting instances.

Rekognition charges only for the images processed. Textract charges for the number of pages processed, with the rate depending on what you extract from each page.

Amazon Textract goes beyond conventional rule-based programming and simple Optical Character Recognition (OCR) to improve accuracy. It automatically extracts text and data from a scanned document. With the help of machine learning, Textract can process hundreds of documents within minutes.

The Rekognition API can identify objects, people, text, scenes, and activities in real-world images. We use it to identify text and objects in the document.

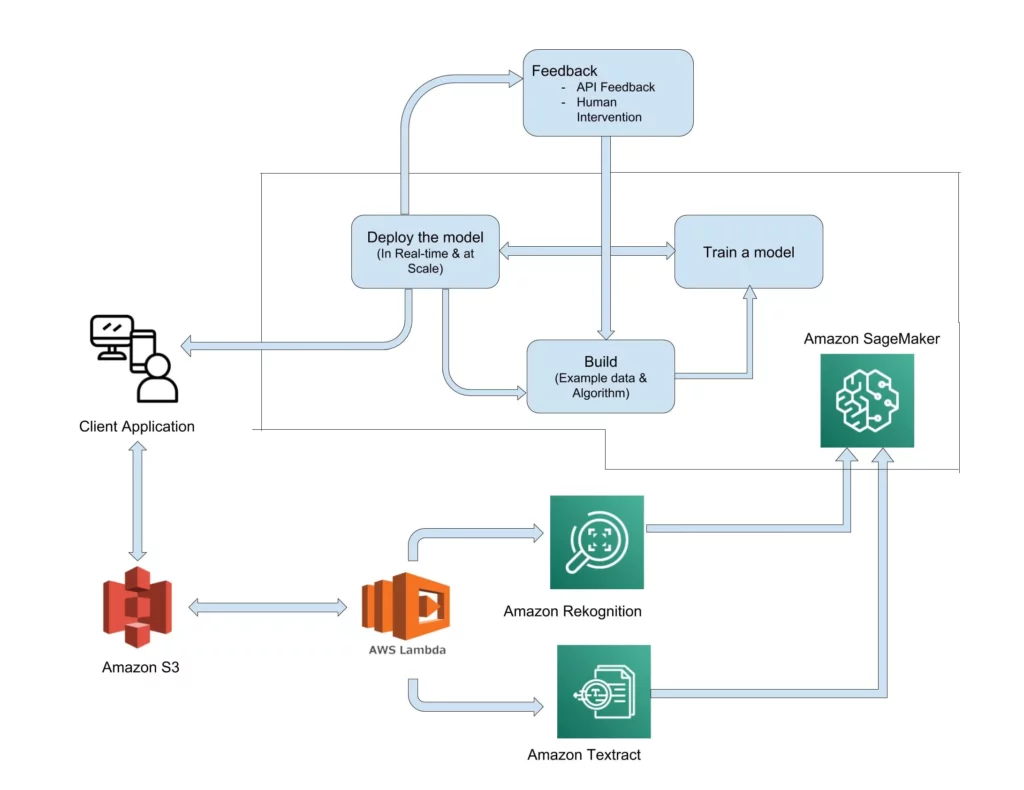

In the reference architecture, we ingest documents from the client application into an Amazon S3 bucket. We then use Textract and Rekognition for 1) textual analysis, 2) image analysis, and 3) document feature analysis. Amazon SageMaker is used for machine learning, along with a feedback mechanism to enhance verification accuracy.

The workflow starts with storing all your documents in Amazon S3 (Simple Storage Service). Amazon S3 enables you to securely and cost-effectively store, process and retrieve any amount of data efficiently. It also enables you to ingest data in various formats.

Based on the size and format of the documents and the frequency with which you upload them from the client application for verification and validation, we will either use a simple API action (such as copying) or invoke an AWS Lambda function (for example, to transcode an image) to ingest data into Amazon S3.
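The choice between a plain copy and a Lambda invocation can be sketched as follows. This is a minimal illustration, not the actual ingestion code: the set of directly usable formats, the Lambda function name `transcode-document`, and the payload shape are all assumptions. The clients are passed in, so in practice they would be `boto3.client("s3")` and `boto3.client("lambda")`.

```python
import json

# Assumption: formats the analysis services can read without conversion.
DIRECT_FORMATS = {".png", ".jpg", ".jpeg"}


def ingest(s3_client, lambda_client, src_bucket, key, dest_bucket,
           transcode_function="transcode-document"):
    """Copy a document into the verification bucket, or hand it to Lambda for transcoding."""
    suffix = "." + key.rsplit(".", 1)[-1].lower() if "." in key else ""
    if suffix in DIRECT_FORMATS:
        # Simple API action: server-side copy between buckets.
        s3_client.copy_object(
            Bucket=dest_bucket,
            Key=key,
            CopySource={"Bucket": src_bucket, "Key": key},
        )
        return "copied"
    # Anything else is sent to a (hypothetical) Lambda function asynchronously.
    payload = {"bucket": src_bucket, "key": key, "dest_bucket": dest_bucket}
    lambda_client.invoke(
        FunctionName=transcode_function,
        InvocationType="Event",
        Payload=json.dumps(payload).encode("utf-8"),
    )
    return "transcoding"
```

A real deployment would more likely trigger the Lambda function from an S3 event notification; the explicit call here just keeps the decision visible.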

Once your documents are ingested into the S3 bucket, we propose a three-layer analysis. The architecture is based on the idea that any document can be verified by analyzing the three components that constitute every physical document: text, graphics, and physical characteristics.

Hence we are performing textual analysis, image analysis and document feature analysis to verify the authenticity of the document.

Textual analysis is the primary level of document verification. It relies on Optical Character Recognition (OCR) and deals with the text in the document. We identify the text and search the database to see whether we can assign a category to the document, which tells us the document type. SageMaker and Textract enable us to perform this textual analysis. Textract also takes care of errors such as any tilt introduced while scanning the document.
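A minimal sketch of the textual layer, assuming the document is an image already stored in S3. `detect_document_text` is the Textract API call; the confidence threshold and the `CATEGORY_KEYWORDS` table are hypothetical stand-ins for the database lookup described above. The client is injected and would normally be `boto3.client("textract")`.

```python
def extract_lines(textract_client, bucket, key, min_confidence=80.0):
    """Return the text of every LINE block Textract is reasonably confident about."""
    response = textract_client.detect_document_text(
        Document={"S3Object": {"Bucket": bucket, "Name": key}}
    )
    return [
        block["Text"]
        for block in response["Blocks"]
        if block["BlockType"] == "LINE" and block["Confidence"] >= min_confidence
    ]


# Hypothetical keyword table standing in for the document-type database.
CATEGORY_KEYWORDS = {
    "passport": ("passport", "nationality"),
    "invoice": ("invoice", "amount due"),
}


def categorize(lines):
    """Pick the category whose keywords appear most often, or None if none match."""
    text = " ".join(lines).lower()
    scores = {
        category: sum(keyword in text for keyword in keywords)
        for category, keywords in CATEGORY_KEYWORDS.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None
```

Keyword matching is only a placeholder here; in the proposed architecture the category assignment would come from the trained model and the reference database.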

Image analysis is the second layer in the architecture, and it examines the graphical components of the document. To verify the document's authenticity, it analyzes the logo, figures, text sections, and so on. If a passport is being verified, for example, image analysis can predict its authenticity by checking the position, movement, color, and accuracy of the logo, stamp, and signature.
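The position check can be illustrated with a small comparison of bounding boxes. This sketch assumes boxes in the ratio format Rekognition uses (`Left`, `Top`, `Width`, `Height` as fractions of the image size). The template values, the tolerance, and the function names are illustrative assumptions.

```python
BOX_FIELDS = ("Left", "Top", "Width", "Height")


def box_offset(expected, detected):
    """Largest absolute difference across the four bounding-box fields."""
    return max(abs(expected[f] - detected[f]) for f in BOX_FIELDS)


def check_layout(template, detections, tolerance=0.03):
    """Return the names of elements that are missing or displaced beyond the tolerance."""
    problems = []
    for name, expected_box in template.items():
        detected_box = detections.get(name)
        if detected_box is None or box_offset(expected_box, detected_box) > tolerance:
            problems.append(name)
    return problems


# Hypothetical reference layout for one document type.
template = {
    "logo": {"Left": 0.10, "Top": 0.05, "Width": 0.20, "Height": 0.10},
    "stamp": {"Left": 0.60, "Top": 0.70, "Width": 0.15, "Height": 0.15},
    "signature": {"Left": 0.55, "Top": 0.88, "Width": 0.30, "Height": 0.06},
}

# Hypothetical detections: logo nearly in place, stamp shifted, signature absent.
detections = {
    "logo": {"Left": 0.11, "Top": 0.05, "Width": 0.20, "Height": 0.10},
    "stamp": {"Left": 0.70, "Top": 0.70, "Width": 0.15, "Height": 0.15},
}

print(check_layout(template, detections))
```

The list of flagged elements could then be fed to the SageMaker model as features rather than used as a hard pass/fail rule.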

Document feature analysis is the third layer. Every document has specific physical characteristics such as color, thickness, texture, depth, size, weight, and layout. Based on the physical characteristics that are accessible, our machine learning module will try to identify fake documents.

We will use Textract and Rekognition APIs to perform this three-layer analysis. This analysis can be used to build, train and deploy the machine learning module using SageMaker.

Amazon has already trained Textract machine learning models on tens of millions of documents from virtually every industry, including invoices, receipts, contracts, tax documents, sales orders, enrollment forms, benefit applications, insurance claims, policy documents and many more.

Moreover, to enhance architecture accuracy, we are going beyond Textract to also use Rekognition and SageMaker along with human intervention.

Rekognition returns the detected text, a confidence score for the accuracy of the result, the type of the detected text, and objects such as a logo or signature. It also provides the coordinates of each detected object. We will compare these features of the document against the database for document validation.
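The text portion of that response can be read as in the sketch below. `detect_text` and the fields `DetectedText`, `Type`, `Confidence`, and `Geometry.BoundingBox` come from the Rekognition API; the confidence threshold and the flattened output shape are assumptions made for illustration. The client is injected and would normally be `boto3.client("rekognition")`.

```python
def detect_text_elements(rekognition_client, bucket, key, min_confidence=90.0):
    """Return detected text with its type, confidence, and bounding box."""
    response = rekognition_client.detect_text(
        Image={"S3Object": {"Bucket": bucket, "Name": key}}
    )
    return [
        {
            "text": detection["DetectedText"],
            "type": detection["Type"],  # "LINE" or "WORD"
            "confidence": detection["Confidence"],
            "box": detection["Geometry"]["BoundingBox"],
        }
        for detection in response["TextDetections"]
        if detection["Confidence"] >= min_confidence
    ]
```

The returned boxes use ratios of the image width and height, so they can be compared against a stored template regardless of scan resolution.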

Amazon SageMaker has a three-stage workflow: build, train, and deploy. In the build stage, we can work with the data received from Textract and Rekognition, experiment with algorithms, and visualize the output.

To train the model for document verification and validation, we simply provide the location of the data in S3 and the type and quantity of Amazon SageMaker ML instances, and SageMaker trains the model with a single click.
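The same inputs (data location in S3, instance type, and instance count) appear when a training job is started through the API instead of the console. The sketch below only builds the request for SageMaker's `CreateTrainingJob` call. The default instance type, volume size, runtime limit, and the helper name `build_training_request` are assumptions, and the image URI and role ARN must come from your own account.

```python
def build_training_request(job_name, image_uri, role_arn, train_s3_uri, output_s3_uri,
                           instance_type="ml.m5.xlarge", instance_count=1):
    """Assemble keyword arguments for the SageMaker CreateTrainingJob API."""
    return {
        "TrainingJobName": job_name,
        "AlgorithmSpecification": {
            "TrainingImage": image_uri,
            "TrainingInputMode": "File",
        },
        "RoleArn": role_arn,
        "InputDataConfig": [{
            "ChannelName": "train",
            "DataSource": {"S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": train_s3_uri,
                "S3DataDistributionType": "FullyReplicated",
            }},
        }],
        "OutputDataConfig": {"S3OutputPath": output_s3_uri},
        "ResourceConfig": {
            "InstanceType": instance_type,
            "InstanceCount": instance_count,
            "VolumeSizeInGB": 50,  # illustrative value
        },
        "StoppingCondition": {"MaxRuntimeInSeconds": 3600},  # illustrative value
    }

# Usage (requires AWS credentials and the boto3 package):
#   import boto3
#   boto3.client("sagemaker").create_training_job(**request)
```

Keeping the request in a plain function makes it easy to review or test the configuration before any billable resources are started.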

SageMaker provides one-click deployment, and the end-user application can fetch the output through a secure HTTPS connection. An example of such an application is a document verification and validation counter at an airport or customs office.
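From the end-user application, a deployed model is typically called through the SageMaker runtime `invoke_endpoint` API. The sketch below assumes the model accepts and returns JSON; the endpoint name and the feature and response fields are hypothetical. The client is injected and would normally be `boto3.client("sagemaker-runtime")`.

```python
import json


def verify_document(runtime_client, endpoint_name, features):
    """Send extracted document features to a deployed endpoint and return its verdict."""
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=json.dumps(features).encode("utf-8"),
    )
    # The response body is a stream; read it fully and decode the JSON payload.
    return json.loads(response["Body"].read())
```

A counter application would call `verify_document` once per scanned document and show the returned verdict to the officer.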

Our goal is to improve system accuracy consistently to automate document verification. A feedback mechanism is used to optimize the advantages of machine learning.

API feedback will be provided based on a specific organization or business requirement.

The system can have a human interface where humans can provide feedback such as whether the identified document classification is correct or not. This feedback is incorporated when processing new documents.

The human intervention workflow supports multiple levels. In the first level, we show the original document and the metadata the system extracted through an interface such as Amazon Mechanical Turk, and we collect human feedback to validate whether the finding is correct.

Each randomly selected outcome is shown to at least three different reviewers, and the system accepts the feedback only if all three responses are consistent. If the responses differ, that input is discarded and the outcome is shown to a different set of reviewers. This process continues until a group of reviewers reaches a consensus. With each iteration, the document is presented to reviewers whose expertise is considered higher than that of the previous group. The idea is that the first group can consist of interns, entry-level professionals, or low-skilled professionals who can verify the computer's results. Only the subset of documents on which these users fail to reach a consensus goes to the next, higher-credibility group. Each user's credibility rank is increased or decreased based on the outcome.
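The escalation logic described above can be sketched in a few lines. This is one possible reading, under stated assumptions: each tier supplies a single panel of three reviewers, a verdict requires unanimity, and credibility moves by one point per reviewer depending on agreement with the final verdict. The names `seek_consensus`, `ask`, and the reviewer data are all hypothetical.

```python
def seek_consensus(document_id, tiers, ask, credibility, panel_size=3):
    """Escalate a document through reviewer tiers until one panel is unanimous.

    `tiers` is ordered from least to most experienced. `ask(reviewer, document_id)`
    returns that reviewer's label. Returns (verdict, tier_index), or (None, None)
    if no panel agrees.
    """
    history = []  # every (reviewer, answer) pair collected so far
    for level, reviewers in enumerate(tiers):
        panel = reviewers[:panel_size]
        answers = [(reviewer, ask(reviewer, document_id)) for reviewer in panel]
        history.extend(answers)
        labels = {answer for _, answer in answers}
        if len(answers) == panel_size and len(labels) == 1:
            verdict = labels.pop()
            # Reward reviewers who matched the final verdict, penalize the rest.
            for reviewer, answer in history:
                change = 1 if answer == verdict else -1
                credibility[reviewer] = credibility.get(reviewer, 0) + change
            return verdict, level
    return None, None


# Hypothetical reviewers and votes for one document.
tiers = [["ann", "bob", "cy"], ["dee", "eli", "fay"]]
votes = {"ann": "authentic", "bob": "fake", "cy": "authentic",
         "dee": "fake", "eli": "fake", "fay": "fake"}
credibility = {}

verdict, level = seek_consensus("doc-1", tiers, lambda r, d: votes[r], credibility)
print(verdict, level, credibility)
```

In this example the first tier disagrees, the second tier is unanimous, and the first-tier reviewer who matched the final verdict gains credibility while the other two lose it.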

Existing document verification and validation systems have many limitations due to their reliance on conventional programming. Upgrading these systems to embed machine learning alongside traditional programming will increase the accuracy of document verification and validation, and it will save time and money for private and public entities of all sizes. Hence we proposed a three-layer architecture that leverages AWS services to build a document verification and validation system that not only performs better but also improves its accuracy automatically over time.

TechnoGems engineers have extensive experience developing a variety of AI/ML-based solutions. Our engineers and architects can help you build your next AI solution. Contact TechnoGems at inquiries@technogemsinc.com to find out more.
