Machine learning offers a wide variety of benefits, but it is hard for IT professionals to deploy models without prior experience of ML frameworks. Moreover, before each deployment, developers need to adapt the models to the target platform's hardware and software configuration. As a result, it can be very difficult to quickly build, deploy and maintain machine learning applications, which slows delivery and increases the risk of inaccurate results.

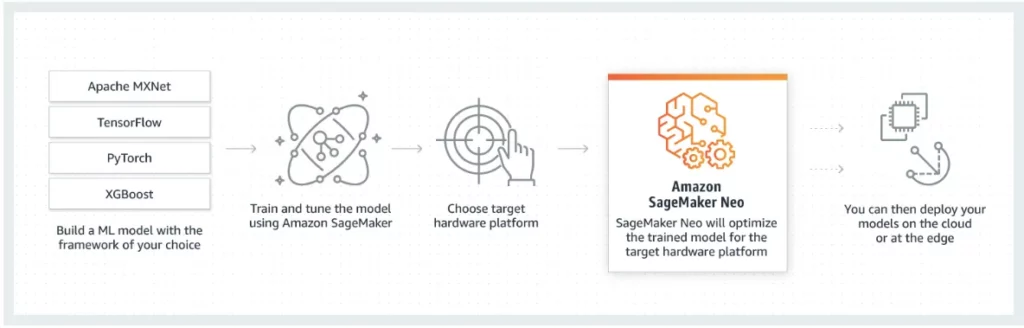

Amazon SageMaker Neo is a new capability of Amazon SageMaker that lets machine learning models be trained once and run anywhere, in the cloud and at the edge, with optimal performance. It is designed to overcome several of the challenges that developers and IT professionals face.

Amazon's initial release of SageMaker was targeted exclusively at the training phase of ML models. The platform gave developers and data scientists ample storage for uploading large datasets, and its high-end GPUs and CPUs let them run training jobs distributed across multiple machines. Inference testing was also supported by uploading the trained model to a destination S3 bucket. Amazon SageMaker's integration with Python and Jupyter notebooks later made it a go-to destination for developers testing ML models.

Trained ML models are often deployed for inference in highly constrained environments, which makes it difficult for developers to test their performance. Developers tackle this challenge by compiling the trained model for a specific target device to obtain an optimized version, but each model then has to be tested for inference in every target environment individually. Amazon SageMaker Neo solves this challenge by optimizing models for a variety of target environments. With the new platform, developers can feed a fully trained ML model to Neo and generate an executable that is fully optimized for the specified target environment. Developers can now undertake both phases of ML modelling in the cloud without leaving Amazon's platform.
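
As a minimal sketch of what "feeding a trained model to Neo" looks like in practice, assuming the AWS SDK for Python (boto3) and the `create_compilation_job` call on its SageMaker client: the request names the trained model artifact in S3, its framework and input shape, and the target device. The helper functions `build_compilation_job` and `submit_compilation_job` are hypothetical wrappers, and the job name, IAM role ARN, bucket paths and input shape are placeholders.

```python
import json


def build_compilation_job(job_name, role_arn, model_s3_uri, output_s3_uri,
                          framework, input_shape, target_device,
                          max_runtime_seconds=900):
    """Assemble keyword arguments for SageMaker's create_compilation_job call."""
    return {
        "CompilationJobName": job_name,
        "RoleArn": role_arn,  # IAM role Neo assumes to read and write S3
        "InputConfig": {
            "S3Uri": model_s3_uri,  # the fully trained model artifact
            # Name and shape of each model input, serialized as a JSON string.
            "DataInputConfig": json.dumps(input_shape),
            "Framework": framework,  # e.g. "MXNET", "TENSORFLOW", "PYTORCH"
        },
        "OutputConfig": {
            "S3OutputLocation": output_s3_uri,  # where the compiled model is written
            "TargetDevice": target_device,  # e.g. "jetson_tx2" or "ml_c5"
        },
        "StoppingCondition": {"MaxRuntimeInSeconds": max_runtime_seconds},
    }


def submit_compilation_job(sagemaker_client, **job_args):
    """Start the job; sagemaker_client would normally be boto3.client("sagemaker")."""
    return sagemaker_client.create_compilation_job(**build_compilation_job(**job_args))


# Placeholder values only: account ID, role, bucket and shape are hypothetical.
example_request = build_compilation_job(
    job_name="resnet50-jetson-tx2",
    role_arn="arn:aws:iam::123456789012:role/ExampleNeoRole",
    model_s3_uri="s3://example-bucket/models/model.tar.gz",
    output_s3_uri="s3://example-bucket/compiled/",
    framework="MXNET",
    input_shape={"data": [1, 3, 224, 224]},
    target_device="jetson_tx2",
)
```

Keeping request construction separate from submission makes the request easy to inspect or test without AWS credentials; only `submit_compilation_job` touches the network.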

At the re:Invent 2018 conference in Las Vegas, Amazon took the wraps off SageMaker Neo, a feature that enables developers to train machine learning models and deploy them virtually anywhere their hearts desire, either in the cloud or on-premises. It worked as advertised, but the benefits were necessarily limited to AWS customers: Neo was strictly a closed-source, proprietary affair.

Without any manual intervention, Amazon SageMaker Neo optimizes models deployed on Amazon EC2 instances, Amazon SageMaker endpoints and devices managed by AWS Greengrass.

Frameworks and algorithms: TensorFlow, Apache MXNet, PyTorch, ONNX, and XGBoost

Hardware architectures: ARM, Intel, and NVIDIA starting today, with support for Cadence, Qualcomm, and Xilinx hardware coming soon. In addition, Amazon SageMaker Neo is released as open source code under the Apache Software License, enabling hardware vendors to customize it for their processors and devices.

The Amazon SageMaker Neo compiler converts models into an efficient common format, which is executed on the device by a compact runtime that uses less than one-hundredth of the resources that a generic framework would traditionally consume. The Amazon SageMaker Neo runtime is optimized for the underlying hardware, using specific instruction sets that help speed up ML inference.

Most machine learning frameworks represent a model as a computational graph: a vertex represents an operation on data arrays (tensors) and an edge represents data dependencies between operations. The Amazon SageMaker Neo compiler exploits patterns in the computational graph to apply high-level optimizations including operator fusion, which fuses multiple small operations together; constant-folding, which statically pre-computes portions of the graph to save execution costs; a static memory planning pass, which preallocates memory to hold each intermediate tensor; and data layout transformations, which transform internal data layouts into hardware friendly forms. The compiler then produces efficient code for each operator.
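
Two of these graph-level passes can be illustrated with a toy compiler. This is a hypothetical sketch, not Neo's implementation: Python floats stand in for tensors, and only constant folding and operator fusion (a unary op merged into the binary op that feeds it) are shown; static memory planning and data layout transformations are omitted.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import List, Optional

# Toy elementwise operators; Python floats stand in for tensors.
BINARY = {"add": lambda a, b: a + b, "mul": lambda a, b: a * b}
UNARY = {"relu": lambda a: max(a, 0.0)}


@dataclass
class Node:
    name: str
    op: str  # "input", "const", a BINARY/UNARY key, or "fused"
    inputs: List[str] = field(default_factory=list)
    value: Optional[float] = None  # only used by "const" nodes
    steps: List[str] = field(default_factory=list)  # ops run in order by a "fused" node


def apply_op(op, args):
    return BINARY[op](*args) if op in BINARY else UNARY[op](*args)


def constant_fold(graph):
    """Precompute every operator whose inputs are all constants (graph is topologically sorted)."""
    consts, out = {}, []
    for n in graph:
        if n.op == "const":
            consts[n.name] = n.value
        elif (n.op in BINARY or n.op in UNARY) and all(i in consts for i in n.inputs):
            n = Node(n.name, "const", value=apply_op(n.op, [consts[i] for i in n.inputs]))
            consts[n.name] = n.value
        out.append(n)
    return out


def prune(graph, outputs):
    """Drop nodes no output depends on, such as constants only read by folded ops."""
    by_name = {n.name: n for n in graph}
    live, stack = set(), list(outputs)
    while stack:
        name = stack.pop()
        if name not in live:
            live.add(name)
            stack.extend(by_name[name].inputs)
    return [n for n in graph if n.name in live]


def fuse_unary(graph, outputs):
    """Fuse a unary op into the binary op feeding it when nothing else reads that result."""
    uses = Counter(i for n in graph for i in n.inputs)
    uses.update(outputs)  # a graph output must stay materialized
    by_name = {n.name: n for n in graph}
    fused_away, out = set(), []
    for n in graph:
        src = by_name[n.inputs[0]] if n.op in UNARY else None
        if src is not None and src.op in BINARY and uses[src.name] == 1:
            fused_away.add(src.name)
            n = Node(n.name, "fused", src.inputs, steps=[src.op, n.op])
        out.append(n)
    return [n for n in out if n.name not in fused_away]


def run(graph, feeds):
    """Interpret a (possibly optimized) graph; feeds supplies values for "input" nodes."""
    env = dict(feeds)
    for n in graph:
        if n.op == "const":
            env[n.name] = n.value
        elif n.op == "fused":
            val = apply_op(n.steps[0], [env[i] for i in n.inputs])
            for step in n.steps[1:]:
                val = apply_op(step, [val])
            env[n.name] = val
        elif n.op != "input":
            env[n.name] = apply_op(n.op, [env[i] for i in n.inputs])
    return env


# Example: y = relu(x * (2 + 3))
graph = [
    Node("x", "input"),
    Node("c2", "const", value=2.0),
    Node("c3", "const", value=3.0),
    Node("s", "add", ["c2", "c3"]),
    Node("m", "mul", ["x", "s"]),
    Node("y", "relu", ["m"]),
]
optimized = fuse_unary(prune(constant_fold(graph), ["y"]), ["y"])
# optimized: x, s (folded to the constant 5.0), and y (a fused mul+relu node)
```

The six-node graph shrinks to three nodes, yet `run` gives the same result for the original and optimized graphs on any input, which is the invariant every such pass has to preserve.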

Once a model has been compiled, it can be run by the Amazon SageMaker Neo runtime. This runtime takes about 1MB of disk space, compared to the 500MB-1GB required by popular deep learning libraries. An application invokes a model by first loading the runtime, which then loads the model definition, model parameters, and precompiled operations.
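
The loading sequence described above (runtime first, then model definition, parameters and precompiled operations) can be sketched with a hypothetical toy runtime. The Neo-AI project ships its own runtime with its own API, which this sketch does not reproduce; `ToyRuntime`, the JSON graph format and the kernel registry are all illustrative assumptions.

```python
import json

# Hypothetical stand-ins for precompiled, hardware-specific kernels.
PRECOMPILED_OPS = {
    # dense: one output per weight row, out[j] = sum_i x[i] * w[j][i] + b[j]
    "dense": lambda x, w, b: [sum(xi * wi for xi, wi in zip(x, row)) + bj
                              for row, bj in zip(w, b)],
    "relu": lambda x: [max(v, 0.0) for v in x],
}

# Hypothetical compiled artifacts: a graph definition and its parameters.
MODEL_DEFINITION = json.dumps({"nodes": [
    {"name": "h", "op": "dense", "inputs": ["x", "w", "b"]},
    {"name": "y", "op": "relu", "inputs": ["h"]},
]})
MODEL_PARAMS = {"w": [[1.0, -1.0], [0.5, 0.5]], "b": [0.0, -2.0]}


class ToyRuntime:
    """Loads definition, parameters and operators, then executes nodes in order."""

    def __init__(self, definition_json, params, ops):
        self.nodes = json.loads(definition_json)["nodes"]  # 1. model definition
        self.params = dict(params)                          # 2. model parameters
        self.ops = ops                                      # 3. precompiled operations
        missing = {n["op"] for n in self.nodes} - set(ops)
        if missing:
            raise ValueError(f"no precompiled kernel for: {sorted(missing)}")

    def run(self, inputs):
        env = {**self.params, **inputs}
        for node in self.nodes:
            args = [env[name] for name in node["inputs"]]
            env[node["name"]] = self.ops[node["op"]](*args)
        return env[self.nodes[-1]["name"]]  # last node is treated as the output


runtime = ToyRuntime(MODEL_DEFINITION, MODEL_PARAMS, PRECOMPILED_OPS)
result = runtime.run({"x": [2.0, 1.0]})  # [1.0, 0.0]
```

Because the graph and kernels are resolved at load time, the runtime itself needs no framework code, which is the property behind the small disk footprint claimed above.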

Amazon SageMaker Neo allows machine learning models to train once and run anywhere in the cloud and at the edge. Ordinarily, optimizing machine learning models to run on multiple platforms is extremely difficult because developers need to hand-tune models for the specific hardware and software configuration of each platform. Neo eliminates the time and effort required to do this by automatically optimizing TensorFlow, MXNet, PyTorch, ONNX, and XGBoost models for deployment on ARM, Intel, and NVIDIA processors today, with support for Cadence, Qualcomm, and Xilinx hardware coming soon. You can access SageMaker Neo from the SageMaker console and, with just a few clicks, produce a model optimized for your cloud instance or edge device. Optimized models run up to two times faster and consume less than one-hundredth of the storage space of traditional models.

Developers spend a lot of time and effort delivering accurate machine learning models that can make fast, low-latency predictions in real time. This is particularly important for edge devices, where memory and processing power tend to be highly constrained but latency is critical. For example, sensors in autonomous vehicles typically need to process data within a thousandth of a second to be useful, so a round trip to the cloud and back isn't possible. There is also a wide array of hardware platforms and processor architectures for edge devices, and to achieve high performance developers need to spend weeks or months hand-tuning their model for each one. Because the tuning process is so complex, models are rarely updated after they are deployed to the edge, and developers miss out on the opportunity to retrain and improve them based on the data the edge devices collect.

Amazon Web Services has added multiple capabilities to Amazon SageMaker, its machine learning platform as a service. The company has also announced SageMaker Neo, a new extension to the service that optimizes trained ML models for targeted deployment. The accompanying Neo-AI project is open source, making it possible for software and hardware vendors to extend the program's capabilities according to their needs.

Amazon has launched Neo-AI, a new open source project that aims to optimize the performance of machine learning (ML) models for numerous platforms.

Amazon SageMaker Neo was announced as an extension of Amazon SageMaker, an ML platform as a service.

SageMaker initially targeted the training phase of ML models, and SageMaker Neo takes on the biggest challenge of optimizing ML models for diverse target environments, effectively closing the loop between the training and inference phases of ML models.

SageMaker Neo can compile ML models built using Apache MXNet, TensorFlow, and PyTorch, among others, needing only a fully-trained model and target platform as input parameters to generate a native, fully-optimized model ready for inference.

Currently, SageMaker Neo supports NVIDIA Jetson TX1, NVIDIA Jetson TX2, Raspberry Pi 3, and AWS DeepLens as target deployments, with support for Xilinx and Cadence hardware to follow.
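
When compiling through the SageMaker API, the target board is named in the `TargetDevice` field of a compilation job. A small lookup such as the following maps the boards listed above to those identifiers; the helper name `target_device_for` is hypothetical, and current versions of the API accept many more targets than this list.

```python
# Boards named in the text, mapped to SageMaker Neo TargetDevice identifiers.
NEO_TARGET_DEVICES = {
    "NVIDIA Jetson TX1": "jetson_tx1",
    "NVIDIA Jetson TX2": "jetson_tx2",
    "Raspberry Pi 3": "rasp3b",
    "AWS DeepLens": "deeplens",
}


def target_device_for(board_name):
    """Return the TargetDevice string for a listed board, or raise ValueError."""
    try:
        return NEO_TARGET_DEVICES[board_name]
    except KeyError:
        supported = ", ".join(sorted(NEO_TARGET_DEVICES))
        raise ValueError(
            f"{board_name!r} is not a listed Neo target; expected one of: {supported}"
        ) from None
```

Failing loudly on an unknown board is cheaper than discovering the mistake after a compilation job has been queued.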

Frameworks: MXNet, TensorFlow, PyTorch, or XGBoost

Hardware architecture: Intel, NVIDIA, or ARM

For Neo-AI:

Frameworks and algorithms: TensorFlow, Apache MXNet, PyTorch, ONNX and XGBoost.

Hardware architecture: ARM, Intel and NVIDIA are supported from today, with support for Cadence, Qualcomm and Xilinx hardware launching soon. AWS said that all of these companies, except NVIDIA, will contribute to the project.

Amazon SageMaker Neo converts the framework-specific functions and operations for TensorFlow, MXNet, and PyTorch into a single compiled executable that can be run anywhere. Neo compiles and generates the required software code automatically.

Easy and Efficient Software Operations

Amazon SageMaker Neo outputs an executable that is deployed on cloud instances and edge devices. The Neo runtime reduces the usage of resources such as storage on the deployment platforms by 10x and eliminates the dependence on frameworks. As an example, the Neo runtime occupies 2.5MB of storage, compared with framework-dependent deployments that can occupy up to 1GB.
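
As a quick check of the storage example, taking 1GB as 1024MB (with 1GB = 1000MB the ratio is exactly 400), the 2.5MB versus 1GB comparison works out to a much larger saving than the general 10x figure:

$$
\frac{1\,\text{GB}}{2.5\,\text{MB}} = \frac{1024\,\text{MB}}{2.5\,\text{MB}} \approx 410
$$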

Neo is available as open source code as the Neo-AI project under the Apache Software License. This enables developers and hardware vendors to customize applications and hardware platforms, and take advantage of Neo’s optimization and reduced resource usage techniques.

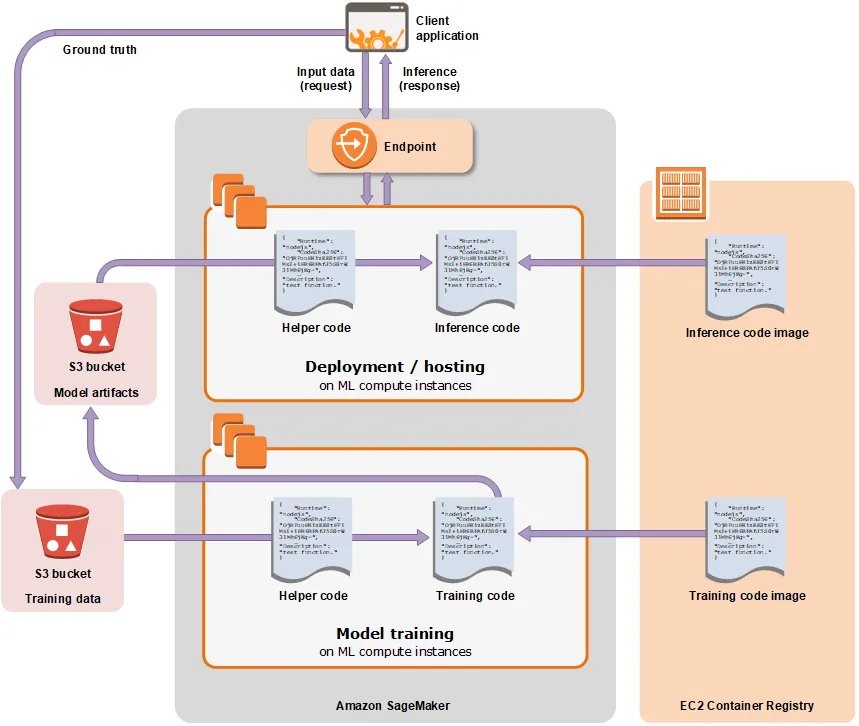

Amazon SageMaker also provides model hosting services for model deployment, as shown in the following diagram. Amazon SageMaker provides an HTTPS endpoint where your machine learning model is available to provide inferences.
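
Once a model is hosted, a client sends inference requests to that HTTPS endpoint. The following is a minimal sketch assuming boto3's `sagemaker-runtime` client and its `invoke_endpoint` call; the endpoint name, the JSON content type and the payload layout are assumptions that depend on the serving container, and `predict` is a hypothetical helper.

```python
import json


def predict(runtime_client, endpoint_name, payload):
    """Send one JSON request to a SageMaker endpoint and decode the JSON reply.

    runtime_client is expected to be boto3.client("sagemaker-runtime").
    """
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",  # assumed request format
        Accept="application/json",       # assumed response format
        Body=json.dumps(payload),
    )
    # The response body is a streaming object; read() returns the raw bytes.
    return json.loads(response["Body"].read())
```

Passing the client in, rather than creating it inside the function, keeps credentials and region configuration with the caller and lets the helper be exercised with a stub.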

For edge devices, machine learning model optimization is often constrained by limited computing power and storage, because the devices are far from cloud data centers, and models have typically had to be tuned by hand. Neo-AI aims to solve this problem. AWS describes the benefits of the Neo-AI project as follows:

Converted models can run up to two times faster, with no loss of accuracy.

Complex models can now run on almost any resource-limited device, unlocking innovative use cases such as self-driving cars, safety devices and anomaly detection in manufacturing.

Developers can run models on target hardware independently of frameworks. The Neo-AI runtime takes up only about 1MB of disk space, compared with 500MB-1GB for popular deep learning libraries, and Neo-AI is mainly based on the Amazon SageMaker Neo runtime.

Today, in 2018, we are closer than ever to a world of ubiquitous computing in which technology can help change every consumer and business experience. For developers, the opportunities offered by technologies such as AI, IoT, serverless computing and containers have never been greater.
